Summary

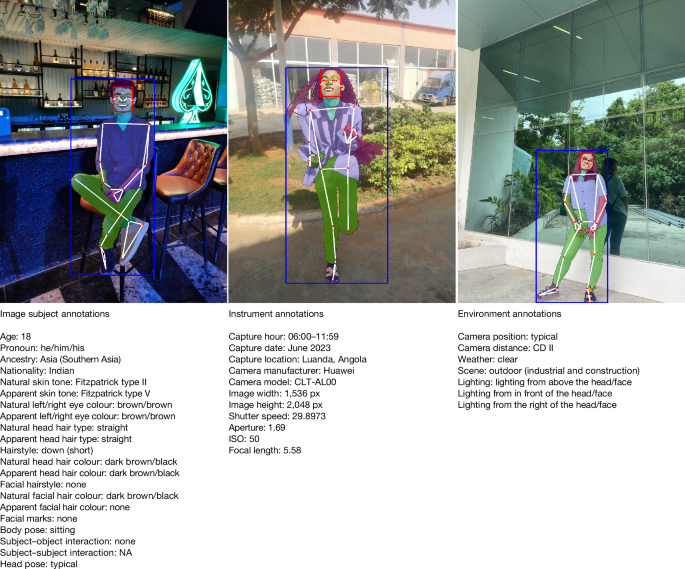

Sony AI has released a dataset for testing the fairness and bias of AI models. It's called the Fair Human-Centric Image Benchmark (FHIBE, pronounced like "Phoebe"). The company describes it as the first publicly available, globally diverse, consent-based human image dataset for evaluating bias across a …

Source: Engadget

Disclaimer: This content is AI-generated from various trusted sources and is intended for informational purposes only. While we strive for accuracy, we encourage you to verify details independently.