Summary

Amazon SageMaker AI now supports EAGLE-based adaptive speculative decoding, a technique that accelerates large language model inference by up to 2.5x while main…

Source: aws.amazon.com

AI News Q&A

Q1: What is the main purpose of the EAGLE-based adaptive speculative decoding in Amazon SageMaker AI?

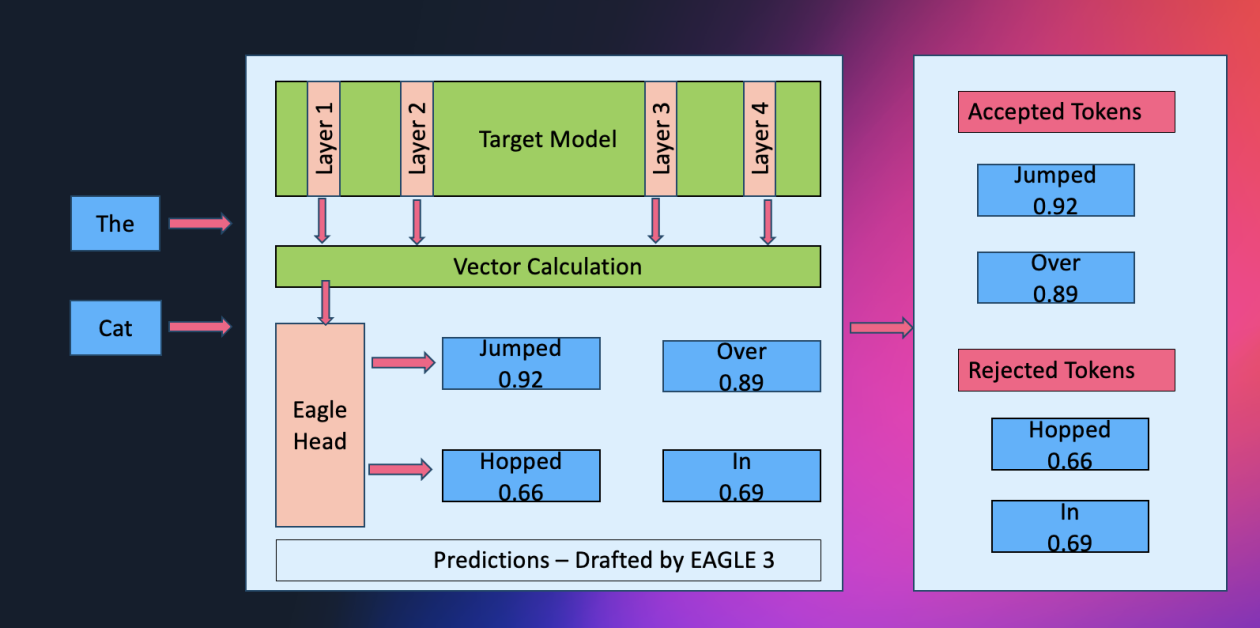

A1: EAGLE-based adaptive speculative decoding in Amazon SageMaker AI is designed to accelerate inference for large language models. According to the announcement, it can speed up generation by up to 2.5 times, which shortens response times for LLM-based workloads such as natural language processing tasks.
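
The "up to" in that figure follows from how speculative decoding works: the gain depends on how often drafted tokens are accepted. In the standard analysis of speculative sampling (Leviathan et al., 2023), if each drafted token is accepted independently with probability α and the drafter proposes γ tokens per step, the target model emits on average (1 - α^(γ+1)) / (1 - α) tokens per verification pass. The sketch below evaluates that expression plus a rough wall-clock estimate, assuming one drafter step costs a fraction c of one target step. It models plain chain drafting, not EAGLE's adaptive drafting, and the function names and inputs are illustrative assumptions rather than SageMaker parameters or measurements.

```python
def expected_tokens_per_step(alpha: float, gamma: int) -> float:
    """Expected tokens emitted per target-model verification pass.

    Assumes each of the `gamma` drafted tokens is accepted independently with
    probability `alpha` (the simplifying assumption of the textbook analysis).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if alpha == 1.0:
        return gamma + 1.0  # every draft accepted, plus one token from the target
    return (1.0 - alpha ** (gamma + 1)) / (1.0 - alpha)


def expected_speedup(alpha: float, gamma: int, c: float) -> float:
    """Rough wall-clock speedup over plain one-token-at-a-time decoding.

    `c` is the assumed cost of one drafter step relative to one target step.
    Each iteration runs `gamma` drafter steps plus one target pass, and the
    target is assumed to verify all drafted positions in that single pass.
    """
    return expected_tokens_per_step(alpha, gamma) / (gamma * c + 1.0)


if __name__ == "__main__":
    # Illustrative inputs only, not measured values.
    for alpha in (0.5, 0.7, 0.9):
        print(alpha, round(expected_speedup(alpha, gamma=4, c=0.05), 2))
```

Under these assumptions the speedup climbs steeply with the acceptance rate α and shrinks as the drafter becomes more expensive. For this reason, published speedups for speculative decoding are workload-dependent upper bounds rather than fixed multipliers.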

Q2: How does speculative execution contribute to the acceleration of generative AI models?

A2: In the Speculative Decoding (SpecDec) approach, speculative execution means using a model optimized for drafting to propose several future tokens, which the target model then verifies efficiently. Because a single verification step can accept multiple tokens, this draft-then-verify paradigm reduces the number of sequential decoding steps. The SpecDec authors report around a 5x speedup on tasks such as machine translation, with generation quality comparable to beam search decoding.
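
The draft-then-verify loop can be sketched in a few lines. This is a minimal greedy version that assumes both models are plain functions returning their most likely next token. It is not SpecDec's implementation, which uses a specially trained drafter and its own verification rule. `toy_target` and `toy_draft` are hypothetical stand-ins for real networks. With greedy verification, the output is identical to decoding with the target model alone. The saving comes from the target checking several drafted positions per pass.

```python
from typing import Callable, List

# A "model" here is any function mapping a token prefix to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(target: NextToken, prompt: List[int], max_new: int) -> List[int]:
    """Baseline: one sequential target-model call per generated token."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(target(out))
    return out[len(prompt):]


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       max_new: int, gamma: int = 4) -> List[int]:
    """Greedy draft-then-verify; returns the same tokens as greedy_decode(target, ...)."""
    out = list(prompt)
    while len(out) - len(prompt) < max_new:
        remaining = max_new - (len(out) - len(prompt))
        # 1) Draft: the cheap model proposes up to `gamma` tokens autoregressively.
        proposal: List[int] = []
        for _ in range(min(gamma, remaining)):
            proposal.append(draft(out + proposal))
        # 2) Verify: check each drafted position against the target's choice.
        #    A real system scores all positions in one batched forward pass.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok != expected:
                accepted.append(expected)  # keep the target's correction, drop the rest
                break
            accepted.append(tok)
        else:
            # Every draft matched, so the same pass also yields one bonus token.
            accepted.append(target(out + accepted))
        out.extend(accepted[:remaining])  # never exceed max_new
    return out[len(prompt):]


def toy_target(prefix: List[int]) -> int:
    # Hypothetical stand-in for a large model: deterministic next token in 0..9.
    return (sum(prefix) * 7 + 3) % 10


def toy_draft(prefix: List[int]) -> int:
    # Hypothetical stand-in for a small model that disagrees at every third position.
    guess = toy_target(prefix)
    return guess if len(prefix) % 3 else (guess + 1) % 10


if __name__ == "__main__":
    prompt = [1, 2]
    fast = speculative_decode(toy_target, toy_draft, prompt, max_new=12)
    slow = greedy_decode(toy_target, prompt, max_new=12)
    print(fast == slow, fast)
```

Each loop iteration commits at least one token (the target's correction, if nothing else), so decoding never falls behind the baseline in correctness. When the drafter agrees often, each iteration commits several tokens for the price of one target pass.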

Q3: What are the additional advantages of using Speculative Decoding beyond speedup?

A3: Beyond the speedup itself, Speculative Decoding offers three further advantages: improved efficiency in model processing, effectiveness across a variety of seq2seq tasks, and practical value for real-world applications. Together, these make it a useful tool for accelerating generative models without compromising output quality.

Q4: In what ways does Speculative Safety-Aware Decoding (SSD) enhance the safety of large language models?

A4: Speculative Safety-Aware Decoding (SSD) enhances the safety of large language models by integrating speculative sampling into the decoding process. It uses the match ratio between the original model and a smaller model to assess, and then mitigate, the risk of jailbreak attacks. Based on this signal, SSD dynamically switches between decoding schemes that prioritize either utility or safety, keeping the model helpful while also accelerating inference.
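
The match-ratio idea can be illustrated with a hypothetical monitor. In this sketch the "match ratio" is the fraction of positions where the smaller model's drafted tokens agree with the main model's tokens. Decoding switches to a safety-prioritizing mode when the ratio falls below a threshold. The function names, the 0.8 threshold, and the direction of the rule are illustrative assumptions, not the published SSD algorithm.

```python
from typing import Sequence


def match_ratio(drafted: Sequence[int], verified: Sequence[int]) -> float:
    """Fraction of aligned positions where the small model's draft matches.

    Hypothetical helper: compares token ids position by position.
    """
    if len(drafted) != len(verified):
        raise ValueError("sequences must be aligned")
    if not drafted:
        return 1.0  # nothing drafted yet, so no evidence of divergence
    hits = sum(1 for d, v in zip(drafted, verified) if d == v)
    return hits / len(drafted)


def choose_mode(ratio: float, threshold: float = 0.8) -> str:
    """Hypothetical switching rule: low agreement means prioritize safety."""
    return "safety" if ratio < threshold else "utility"


if __name__ == "__main__":
    # Toy (drafted, verified) token windows standing in for real decoding steps.
    windows = [([1, 2, 3, 4], [1, 2, 3, 4]), ([5, 6, 7, 8], [5, 0, 7, 0])]
    for drafted, verified in windows:
        r = match_ratio(drafted, verified)
        print(f"ratio={r:.2f} mode={choose_mode(r)}")
```

Because the ratio falls out of the draft-and-verify comparison that speculative sampling already performs, a monitor of this kind adds almost no extra model calls.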

Q5: What role does information synthesis play in generative AI models?

A5: Information synthesis in generative AI models involves integrating and reorganizing existing information to provide grounded and precise responses. This capability is crucial in scenarios requiring accurate and contextually relevant information, as it helps mitigate issues like model hallucination and improves the overall reliability and effectiveness of AI-generated outputs.

Q6: How do generative AI models differ from traditional AI models in terms of information access?

A6: Generative AI models differ from traditional AI models in their large-scale training and stronger data-modeling capabilities. Instead of only retrieving existing items, they produce high-quality, human-like responses, which opens up new paradigms for information access. They excel at creating tailored content and synthesizing information, improving the user experience by delivering immediate outputs that match each user's needs.

Q7: What potential impact does the integration of EAGLE-based decoding have on real-world applications of AI?

A7: Integrating EAGLE-based decoding can make generative AI models noticeably faster in real-world deployments. Because the technique speeds up token generation, the benefit is greatest for latency-sensitive LLM workloads such as natural language processing tasks, leading to more timely AI-driven solutions across various industries.

References:

- Speculative Decoding: Exploiting Speculative Execution for Accelerating Seq2seq Generation

- Speculative Safety-Aware Decoding